The Sounds of COVID

Millions of people already use Fitbit and other devices to monitor and track a variety of personal health metrics. Now, a researcher at the University of California San Diego has published data indicating that COVID-19 infection can be detected by analyzing voice signals and comparing them with audio clips from healthy speakers.

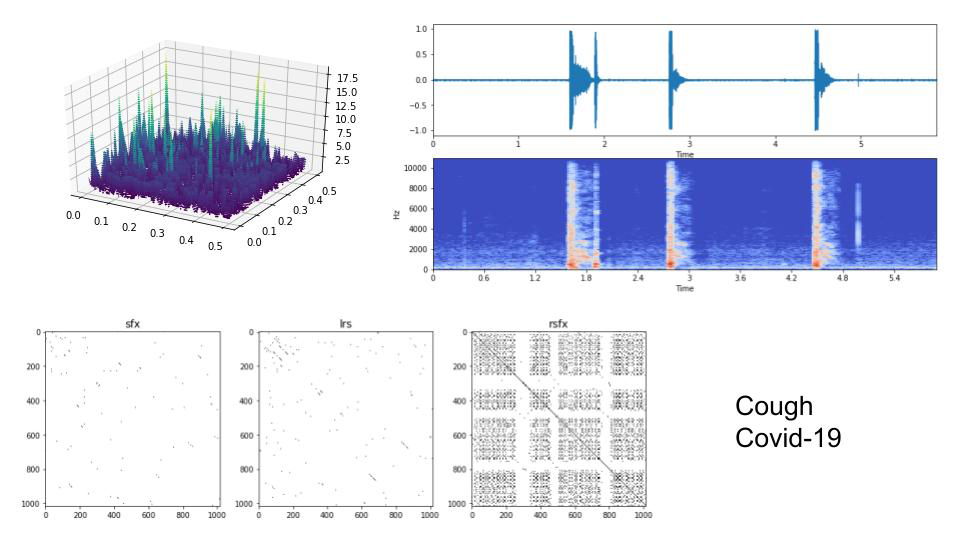

In a research paper* on “Robust Detection of COVID-19 in Cough Sounds,” published in the February 2021 issue of Springer Nature’s SN Computer Science journal, UC San Diego music and computer science professor Shlomo Dubnov and colleagues spell out initial results of their work analyzing samples of coughs and utterances from patients diagnosed with the SARS-CoV-2 virus against a control group of healthy subjects.

“This work displays high potential for evaluating and automatically detecting COVID-19 from web-based audio samples of an individual’s coughs and vocalizations,” said Dubnov, who co-authored the paper with Lebanese American University professor Pauline Mouawad and recent Jacobs School of Engineering alumnus Tammuz Dubnov. “We showed the robustness of our method in detecting COVID-19 in cough sounds with an overall mean accuracy of 97 percent.” With samples of the sustained vowel sound “ah,” the accuracy was 99 percent.

“Now we’re kind of hopeful that some of this will be useful for actual applications,” added the paper’s senior author.

The project originated in a master’s thesis by Dubnov’s son Tammuz, who completed his M.S. degree in engineering sciences in UC San Diego’s Mechanical and Aerospace Engineering department in 2020. His topic: “Signal Analysis and Classification of Audio Samples from Individuals Diagnosed with COVID-19.” Tammuz completed his degree while running a startup, Zuzor, which he founded in 2015.

“My thesis was using artificial intelligence and audio, then COVID happened,” recalls the UC San Diego alumnus. “So that was kind of the genesis of it. I was already doing a lot of work in deep learning, and I was already doing a lot of machine learning before that. So I wondered: how could we use this maybe to diagnose something?”

After completing his thesis, Tammuz wanted to continue the research and approached his father at UC San Diego, who was also focused on machine learning and audio – but for different applications. “I’ve been working on voice or musical instrument sounds, but also on singing and spoken voice,” said Dubnov, a longtime participant in the Qualcomm Institute at UC San Diego. “We’re talking pretty much about sound texture, of the little details of sound, the repetition of small sound elements. We had these techniques as part of our research toolkit, so it was sort of a leap of faith to see if we could actually discriminate between COVID and non-COVID.”

The machine-learning models developed for the study were “trained” on data (self-submitted audio samples) collected by the Corona Voice Detect project, a partnership between Carnegie Mellon University and the Israel-based company Voca.ai. The recordings contained audio of each individual coughing three times and then uttering the sustained vowel “ah.”

The researchers applied a combined method from nonlinear dynamics to detect COVID-19 in vocal signals – offering a reliability that could also allow detection at an earlier stage. “The robust results of our model have the potential to substantially aid in the early detection of the disease on the onset of seemingly harmless coughs,” said professor Dubnov.

The researchers stress that their model is not intended for independently diagnosing COVID-19. “I wouldn’t rely on an AI to diagnose or not diagnose,” said Tammuz. “We’re hoping more to position it like an early warning system for doctors.”

Shlomo Dubnov agrees. “It would hopefully help physicians decide whether standard tests may be necessary for a diagnosis,” he said. “It also offers a non-invasive way to do surveillance testing at the population level, including analyzing vocal sounds from microphone arrays in public spaces.”

“COVID is a terrible respiratory disease that we’re all facing now, but I’m optimistic that the vaccine will work and COVID will hopefully be a thing of the past,” said Tammuz. “But as part of this project, I ended up talking to a lot of doctors and maybe the bright side of all this is that this technology can be used for other diseases.”

Indeed, there are audio signatures associated with a broad array of medical conditions, ranging from asthma and chronic obstructive pulmonary disease to bronchitis, tuberculosis and congestive heart failure. “We have a whole list of diseases that it could be beneficial for,” he explained. “So that’s kind of the commercial opportunity here.”

Professor Dubnov is now teaming with his son’s software company Zuzor on a joint proposal for a Small Business Innovation Research (SBIR) seed grant from the National Science Foundation. Their goal: to aggregate much larger datasets related to different diseases – data they will need in order to train models to do much deeper feature extraction that, for instance, would allow them to differentiate cough sounds across many different diseases.

Said Zuzor’s Tammuz Dubnov: “There are diseases that have a huge impact on many, many lives, so if we can help even a little bit with that, that could have really big implications.”

___________

*Mouawad, P., Dubnov, T. & Dubnov, S. Robust Detection of COVID-19 in Cough Sounds. SN COMPUT. SCI. 2, 34 (2021).